We’re in degree show season again, and last Monday Kevin and I went down to see the work at Central Saint Martins (CSM), in particular the degree show from the MA in Communication Design.

I was a bit apprehensive about taking an afternoon out to see the show – I’d enjoyed it so much last year that I thought my expectations might be too high – but I was delighted again. Here’s a brief summary of what I found.

- The fascination with visualization continues. I saw work visualizing body shape, books, invasions, deaths, clutter, radio transmissions, news, etc. Lots to pick up on for my book visualization work and Richard’s network visualization work.

- Some themes emerged, especially visual ones. There was a real tension being played out between the old and the new: several projects produced artificially pixelated views, while others rendered onto sheets of rusty iron.

- Some things were notable by their absence. There was less screen-based work than last year, and hardly any ‘physical computing’. And unlike the Dundee show, where Richard and I saw several surface computing projects, I didn’t see any surface work from CSM (though there is one project by Melanie Sayer on the website that I managed to miss).

There were lots of intriguing visualizations. One of them, David Hernández Méndez’s map of the American invasion of Mexico, paid homage to Minard’s famous visualization of Napoleon’s invasion of Russia.

Another, by Jamie Buswell, looked at overcrowding and household clutter in the homes of refugees before they were rehoused. It’s a lovely, thought-provoking piece of work, and ties in with Alex’s work on clutter, but I felt the resulting images were too neat, too designed, to adequately convey the squalor that I think Jamie was trying to document.

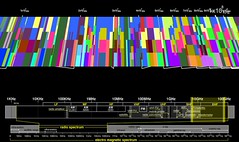

There were several works that might feed into our retired “Revealing the Invisible” theme. One that may prove particularly interesting for our thinking about radio frequency spectrum analysis was Seung-Yong Jung’s set of plots visualizing radio broadcasts across the UK, showing both broadcast strength and station popularity.

Seung-Yong also had some intriguing radial prints in which each row of pixels was printed on a different concentric clear disk, so that as one turned the disks the letters moved in and out of legibility.

But my favourite work of revealing was by one of the Ceramics BA students, Judit Kollo. Judit had produced a wall hanging built from textured slabs of porcelain. They looked good simply as abstract pieces, their texture almost like a relief map.

But when you turned on the light behind them, not only was the difference in thickness between different parts of the tiles revealed, but you could also see the jellyfish Judit had drawn on the reverse by pushing pinpricks almost all the way through. Two of them are just about visible in this photo.

The book visualizations were interesting. One of the things Linda and I have been grappling with is how to make a visualization free-standing, i.e. how to include enough information in the design and the key that people can start to decode it without needing the designer to explain it or needing to read a manual. I’m not sure the ones I saw managed this, though they were great in other ways.

Laura Sulivan’s was an interesting series that tried to analyse texts from the perspective of the visual principles of information design.

I was lucky enough to be there when Ebany Spencer presented her project to the current first years. Ebany had several visualizations of “Flatland”, which I knew as it’s a popular novella among mathematicians. There were several innovations in Ebany’s work. She’d used a stave-like notation where each stave represented a different world in the book. She’d also printed each stave as a foldout hardback book, with slots cut into the top and bottom of the pages into which the other stave-books slotted. I also loved the way she’d folded out the paper to give her visualization a third dimension.

Ebany’s goals and inspiration also differed from those of the other book visualizations I’ve encountered. One of her goals was to take a strong editorial role: she seemed to want not just to reveal new things about the structures in “Flatland”, but also to use those structures to tell a story about the work. Her use of the marginalia of medieval illuminated manuscripts as inspiration was also interesting.

There were several other works in which paper was physically manipulated. Haein Song made “Books of the Absurd”, in which he attempted to “attain a sense of futility, whilst being immersed in a love of creation”.

In form, though not in motivation, Haein’s work reminded me of Lucy Norma’s recycled book lightshades that Richard and I saw during last year’s New Designers.

Cutting paper also figured in several designers’ projects. Daniela Silva used cut-outs to physically map the interiors of the homes she’s lived in.

And Aysegul Turan’s “All That Is Solid Melts Into Air” was a more abstract look at change, made by cutting or rubbing through paper that was also covered in patterns of what may have been ink or ash.

Recycling and the environment received less attention than last year. I’m pleased about that: it’s an important subject, but it was receiving so much attention from young designers last year that it began to get repetitive. There were still some nice pieces, like Angela Morelli’s maps of global water usage.

Another theme from last year’s shows that had dwindled this year was CCTV. I did see one piece, by Joan Ayguadé Jarque on the BA in Product Design. He’d made a CCTV housing that subtly told people where it was looking, and provided a domed mirror so that people standing underneath it could use it for their own surveillance.

There were two projects that might be of interest to those studying family collections of media. In one, designer Sarah Roesink asked her parents to write down personal memories associated with particular photos and then made them into an elegant bound book. Sarah was on the photography side of the course, but I thought her response to the need to individually honour old family memories and photographs was something for us to chew on.

From the opposite perspective, Mayuko Sakisaka on the Product Design BA made a piece called “Please keep my secrets”, a secure (and beautiful) printer for printing and storing text messages from one’s boyfriend.

Storytelling was picked up again by Aris Tsoutsas in his project “On The Riverside”. But this was almost the opposite of a digital project: the cover sheet was rendered onto a large sheet of rusty iron!

At the MA in Communication Design I had hoped to see more ‘physical computing’ than I did. That said, the two projects I did see with a strong ‘craft’ bent were two of my favourites of the whole day. In “Printed Matter”, Evelin Kasikov had embroidered fonts and other design experiments onto card in cross-stitch. She had letters, colour charts, and pixelated phrases. The result was a wonderful evocation of the contrast between the digital and the slower crafts.

Robert Corish’s “Audio & Visual Evolution” reminded me of several of our projects and was a real explosion of creativity. He had straightforward explorations of randomness that Tuck would have enjoyed.

But at the heart of the project were two machines for generating abstract sound feedback loops. He’d used MaxMSP, Arduinos and a host of other stuff to great effect.

Robert was one of the few designers to include his commonplace book (or lab notebook, or day book, or whatever you call the notebooks we carry around) as part of his display, and you can see why. His notes reminded me of Stuart’s and Richard’s; in fact, he’s put his notebooks on his website, which hints at the work Richard has done with his own.

There was so much more I could write about. Definitely worth a visit next year. One project it would be awful to sign off without mentioning was Kacper Hamilton’s “Deadly Glasses”. Kacper made a wine glass to represent each of the seven deadly sins. For example, the one for sloth had a tap on the bottom and a hanging chain, so that one could hang it up and lie under it to drink from the huge glass, drop by drop. Lust had a frosted glass ball at the end of a hollow stem, so that one could drink by licking the base of the glass. Very clever and beautifully executed.